The news that “Kubernetes 1.20 deprecated Docker” hit the IT industry in early December 2020. So far, the only concrete result is a deprecation notice from Kubernetes, and it concerns Dockershim in particular. Support for Docker as a container runtime will be phased out in a later release; at the time of the announcement, version 1.22, scheduled for the second half of 2021, was named as the earliest candidate. Some readers took this to mean that 2021 could mark the beginning of Docker’s demise.

What are Docker and Kubernetes?

Docker allows you to bundle an application (for example, a JAR file containing compiled Java code) with its runtime environment (for example, the OpenJRE JVM) into a single image, which is then used to create containers. In fact, the Docker image includes all of the operating-system-level dependencies the application needs.

This makes it possible to use the same package on the developer’s laptop and in the test and production environments.

Kubernetes is an orchestration system that manages many containers and assigns them resources (CPU, RAM, and storage) from a pool of cluster machines. It’s also in charge of the containers’ lifecycle and of grouping them into Pods. Compared to Docker, it operates at a higher level because it manages containers across multiple machines.

If Kubernetes is similar to a hosting or cloud service, then a Docker container is comparable to a virtual machine. Docker (or Docker Compose) allows you to run multiple processes, group them together in a network, and assign storage to them, all on the same computer. Kubernetes can do the same thing across a cluster of machines.

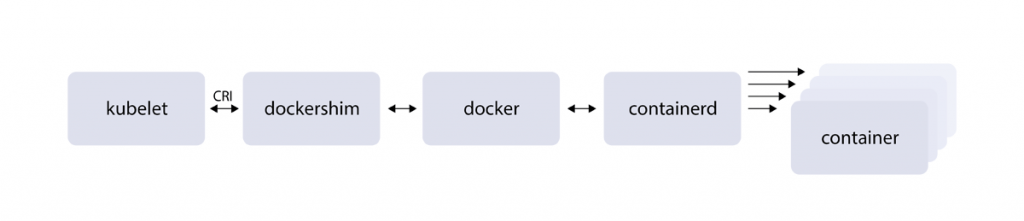

Kubernetes reduces Docker to the role of a component that runs the containers. Such components can now be swapped thanks to the CRI (Container Runtime Interface) standard. containerd and CRI-O are the main runtimes that implement the CRI directly. Docker requires the Dockershim adapter, which is exactly what the Kubernetes maintainers want to stop carrying.

If you’re a Kubernetes end-user, you won’t notice much of a difference. This does not imply that Docker is dead, nor does it imply that you can’t or shouldn’t use it as a development tool. Docker is still a helpful tool for creating containers, and the images generated by docker build can be used in your Kubernetes cluster.

If you’re using a managed Kubernetes service like GKE, EKS, or AKS (which default to containerd), make sure your worker nodes are running a supported container runtime before Kubernetes removes Docker support in a future release. You may need to update node customizations depending on your environment and runtime needs.

If you run your own cluster, you’ll have to make some adjustments to avoid cluster failure. Starting with v1.20, you will see a Docker deprecation notice. Once Docker runtime support is dropped in a future Kubernetes version (at the time of writing, planned for version 1.22 at the earliest, in the second half of 2021), you’ll have to switch to one of the other compatible container runtimes, such as containerd or CRI-O. Simply ensure that the runtime you select supports the Docker daemon settings you currently rely on (such as logging).
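To see which nodes would be affected, one option is to inspect the runtime each node reports. The sketch below is a minimal illustration rather than a definitive tool: it assumes you have saved the output of `kubectl get nodes -o json` and reads the `status.nodeInfo.containerRuntimeVersion` field, which has the form `<runtime>://<version>`. The sample data, node names, and the function name `nodes_using_docker` are hypothetical.

```python
import json
from typing import List

# Hypothetical, heavily trimmed sample of `kubectl get nodes -o json` output.
SAMPLE_NODES_JSON = """
{
  "items": [
    {"metadata": {"name": "node-a"},
     "status": {"nodeInfo": {"containerRuntimeVersion": "docker://19.3.13"}}},
    {"metadata": {"name": "node-b"},
     "status": {"nodeInfo": {"containerRuntimeVersion": "containerd://1.4.3"}}}
  ]
}
"""


def nodes_using_docker(nodes_json: str) -> List[str]:
    """Return the names of nodes whose reported container runtime is Docker."""
    flagged = []
    for node in json.loads(nodes_json)["items"]:
        runtime = node["status"]["nodeInfo"]["containerRuntimeVersion"]
        # The field looks like "<runtime>://<version>"; keep the runtime part.
        runtime_name = runtime.split("://", 1)[0]
        if runtime_name == "docker":
            flagged.append(node["metadata"]["name"])
    return flagged


if __name__ == "__main__":
    # Nodes listed here would need a different runtime before upgrading.
    print(nodes_using_docker(SAMPLE_NODES_JSON))
```

Nodes that show up in the result are the ones to migrate to containerd or CRI-O before upgrading to a Kubernetes release without Dockershim.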

So why are people panicking when they see this announcement?

There are two environments being discussed, which is causing some confusion. There’s a component inside a Kubernetes cluster called a container runtime that’s in charge of pulling and running container images. Although Docker is a popular choice for that runtime, it was not designed to be embedded into Kubernetes, which poses issues.

Docker is an entire tech stack, and one part of it is containerd, which is a high-level container runtime in its own right.

Docker is popular because it has a lot of UX enhancements that make it very easy for humans to work with during development. Kubernetes isn’t human, so it has no use for these UX enhancements.

Because of this human-friendly abstraction layer, Kubernetes must use another program, Dockershim, to reach what it truly needs, which is containerd. That’s not ideal, because it adds another component that has to be maintained and that can break. In fact, Dockershim is slated to be removed from the kubelet in a future release (v1.23 was named as the earliest release without it, and the removal ultimately shipped in v1.24), which ends built-in support for Docker as a container runtime.

Why is Dockershim needed?

Docker does not support the Container Runtime Interface (CRI). If it did, we wouldn’t need the shim, and this wouldn’t be a problem.

The Kubernetes community introduced Dockershim as a temporary solution (hence the name: shim) to add support for Docker so that it could be used as a container runtime. The deprecation of Dockershim only means that Dockershim will no longer be maintained in the Kubernetes code base.
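The role of the shim can be pictured as a classic adapter. The following Python sketch is a toy model only: the class and method names (`ContainerRuntime`, `run_container`, `DockerLikeEngine`, `Shim`) are invented for illustration and are not the real CRI or Docker APIs.

```python
from abc import ABC, abstractmethod


class ContainerRuntime(ABC):
    """Toy stand-in for the CRI: the only interface the orchestrator knows."""

    @abstractmethod
    def run_container(self, image: str) -> str:
        ...


class ContainerdLike(ContainerRuntime):
    """A runtime that implements the interface natively, so no shim is needed."""

    def run_container(self, image: str) -> str:
        return f"containerd-like runtime started {image}"


class DockerLikeEngine:
    """A tool with its own API that does not implement the interface."""

    def docker_run(self, image_name: str) -> str:
        return f"docker-like engine started {image_name}"


class Shim(ContainerRuntime):
    """Adapter that translates interface calls into the engine's own API."""

    def __init__(self, engine: DockerLikeEngine) -> None:
        self._engine = engine

    def run_container(self, image: str) -> str:
        return self._engine.docker_run(image)


def schedule(runtime: ContainerRuntime, image: str) -> str:
    """The orchestrator side: it only ever talks to the interface."""
    return runtime.run_container(image)


if __name__ == "__main__":
    print(schedule(ContainerdLike(), "app:1.0"))
    print(schedule(Shim(DockerLikeEngine()), "app:1.0"))
```

The `Shim` class is the extra piece of code someone has to maintain. Removing it is painless for any runtime that speaks the interface directly.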

Do we keep using Dockerfiles?

This change addresses a different environment than most software developers use to interact with Docker. The Docker installation that is used in development is unrelated to the Docker runtime inside the Kubernetes cluster.

Docker is still helpful in all of the ways that it was before the announcement. The image Docker builds isn’t truly Docker-specific; it is an OCI (Open Container Initiative) image. Kubernetes will run any OCI-compliant image, regardless of the tool used to create it. Both containerd and CRI-O know how to pull and run those images.

Evolution of Docker

Docker Engine has grown and evolved into a modular system over time. Components such as logging can be implemented in a variety of ways (Docker supports several logging drivers). This allowed the application architecture to be standardized and simplified.

It was enough for an application to log to the standard streams, stdout and stderr. Docker would gather the logs and store them locally. It also made them available through the docker logs command, which allowed users to read them.

For software engineers, having a single tool to view logs from apps written in Java, Node, PHP, or other languages is quite useful. IT Ops, who manage the systems, have other concerns: the assurance that logs will not be lost, how long they are retained, and how quickly they fill up disk space. This is a different set of issues, and Docker alone does not solve them.
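On the application side, this convention needs very little code. Here is a minimal sketch using Python’s standard `logging` module; the logger name `app` and the helper `build_logger` are illustrative choices, not something the container runtime requires.

```python
import logging
import sys


def build_logger(name: str = "app") -> logging.Logger:
    """Return a logger that writes to stdout, where the runtime can collect it."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    # Attach the handler only once, even if this function is called repeatedly.
    if not logger.handlers:
        handler = logging.StreamHandler(sys.stdout)
        handler.setFormatter(
            logging.Formatter("%(asctime)s %(levelname)s %(message)s")
        )
        logger.addHandler(handler)
    return logger


if __name__ == "__main__":
    log = build_logger()
    log.info("service started")
```

The application never opens a log file or manages rotation; collecting and storing the stream is left to whatever runs the container.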

A single machine vs. a cluster

What works flawlessly on a single machine may not work so well in a cluster. The docker service logs command, the log viewer for Docker Swarm (Docker’s cluster orchestration system), is a good example. Unfortunately, it does not always display the logs in chronological order, which could be due to clock differences between the cluster’s individual machines.

Why is Docker incompatible?
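To illustrate why ordering is harder in a cluster, here is a small Python sketch that merges per-node log streams by timestamp. The node names, log entries, and the `merge_logs` helper are hypothetical, and the approach assumes each stream is already sorted by its own local clock.

```python
import heapq
from datetime import datetime

# Hypothetical per-node streams, each ordered by that node's local clock.
NODE_A = [
    ("2021-01-01T10:00:00", "node-a", "request received"),
    ("2021-01-01T10:00:02", "node-a", "response sent"),
]
NODE_B = [
    ("2021-01-01T10:00:01", "node-b", "query executed"),
]


def merge_logs(*streams):
    """Merge already-sorted log streams into one list ordered by timestamp."""
    return list(
        heapq.merge(*streams, key=lambda entry: datetime.fromisoformat(entry[0]))
    )


if __name__ == "__main__":
    for timestamp, node, message in merge_logs(NODE_A, NODE_B):
        print(timestamp, node, message)
```

The merged order is only as trustworthy as the timestamps. If the clock on one node runs a few seconds behind, its entries will be sorted into the wrong place even though the merge itself is correct.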

Docker’s solutions may work for a single system, but they are not ideal for clusters of machines.

Technically, little has changed for software developers. We’ll continue to create Docker images because they comply with the OCI (Open Container Initiative) specification. This means that any CRI-compatible runtime, whether local or in a cluster, will be able to run these images.

The most significant changes concern our understanding of containers. It’s time to stop thinking of a container as a “light virtual machine” and start thinking in terms of cloud-native applications. What’s important to remember is that each container represents a single process and that its local resources, like files, are only temporary.

Instead, it’s time to start thinking of a container as a cloud-based instance of an application. We must keep in mind that there will be multiple copies of the app, and no one knows which machine a container will run on. As a result, we can’t rely on local files, because a new container instance won’t have access to the old one’s.

So, should we worry about the fact that Kubernetes is deprecating Docker?

The cloud business will expand, and more enterprises will migrate to the cloud. Application containers and horizontal scaling (across numerous machines) are a natural trend in this context. Perhaps we’ll just have to live with the complexities that clustering brings.

Even though Kubernetes appears complicated in and of itself, it will make a lot of things easier if horizontal scaling is unavoidable. In fact, it is complex because the problem it solves (allocating resources in a cluster) is fundamentally difficult. Kubernetes provides the basic tools and vocabulary to deal with this complexity.

The deprecation can cause problems for some people, but it is not catastrophic. Depending on how you interact with Kubernetes, it may not affect you at all, or it may mean some work. In the long run, it will make things easier.

Solutions that simplify Kubernetes will undoubtedly emerge over time. The major cloud providers now offer a hosted Kubernetes cluster service, which spares IT Ops many operational tasks. Because Kubernetes configuration takes the form of declarative YAML files, it will be easy to build tools that let you click your way through cluster setup. Kubernetes YAML could be to clusters what HTML is to the World Wide Web.
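Because a manifest is just structured data, tools can generate it. The sketch below builds a minimal Deployment description as a Python dictionary and prints it as JSON, which kubectl accepts alongside YAML. The function name `deployment_manifest`, the app name `web`, and the image `example/web:1.0` are placeholders chosen for illustration.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int) -> dict:
    """Build a minimal apps/v1 Deployment description as plain data."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the Pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


if __name__ == "__main__":
    # Placeholder values; the output could be saved to a file for kubectl.
    print(json.dumps(deployment_manifest("web", "example/web:1.0", 3), indent=2))
```

A graphical tool would do much the same thing behind the scenes: collect a few values from a form and emit the declarative description, leaving Kubernetes to work out how to reach that state.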
